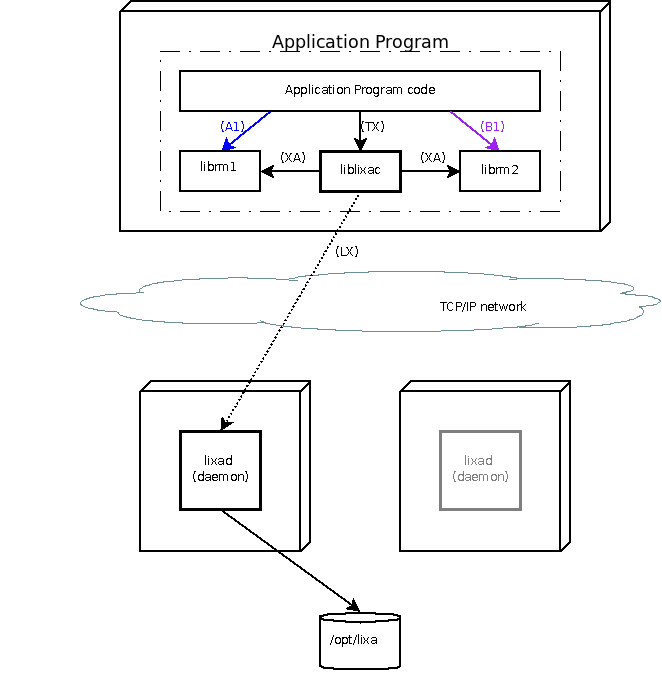

If you are using the LIXA software project in a mission-critical environment, you should set up a high-availability configuration. Looking at Figure 3.1, “Typical LIXA topology”, you will notice that the lixad daemon is a single point of failure: if the system hosting lixad crashes, the Application Program cannot perform distributed transaction processing because the lixad daemon is unavailable.

To avoid the issues related to the “single point of failure” pathology, the suggested configuration is “Active/passive”, as described in the Wikipedia “High-availability cluster” page. You can use:

- any cluster manager, for example Linux-HA Heartbeat
- any shared disk technology like SAN (Storage Area Network) or NAS (Network Attached Storage), or a disk replication technology like DRBD

lixad requires that the filesystem and the block device support the mmap(), munmap() and msync() functions. The faster the filesystem/block device you are using, the better lixad performs.
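The requirement above can be checked on a candidate shared filesystem before installing lixad on it. The following is a minimal sketch, not part of LIXA: the function name check_mmap_support() and the temporary file name pattern are hypothetical. It creates a scratch file in the given directory, maps it with mmap(), dirties and flushes it with msync(), then releases it with munmap(). A successful run only shows that the three calls work on that filesystem; it says nothing about performance or about behaviour during a cluster failover.

```c
#define _POSIX_C_SOURCE 200809L  /* for mkstemp(), ftruncate(), sysconf() */
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <sys/mman.h>
#include <unistd.h>

/*
 * Hypothetical helper (not part of LIXA): returns 0 if a file created in
 * `dir` can be mapped with mmap(), flushed with msync() and released with
 * munmap(); returns -1 otherwise.
 */
int check_mmap_support(const char *dir)
{
    char path[4096];
    long page = sysconf(_SC_PAGESIZE);
    int fd, n, rc = -1;
    char *p;

    if (page <= 0)
        return -1;
    /* build a unique temporary file name inside the directory under test */
    n = snprintf(path, sizeof(path), "%s/lixa-mmap-test-XXXXXX", dir);
    if (n < 0 || n >= (int)sizeof(path))
        return -1;
    if ((fd = mkstemp(path)) == -1) {
        perror("mkstemp");
        return -1;
    }
    /* the mapped region must be backed by real file space */
    if (ftruncate(fd, (off_t)page) == -1) {
        perror("ftruncate");
        goto cleanup;
    }
    p = mmap(NULL, (size_t)page, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
    if (p == MAP_FAILED) {
        perror("mmap");
        goto cleanup;
    }
    memset(p, 'x', (size_t)page);  /* dirty the mapped page */
    /* synchronously flush the dirty page to the underlying storage */
    if (msync(p, (size_t)page, MS_SYNC) == -1)
        perror("msync");
    else
        rc = 0;
    if (munmap(p, (size_t)page) == -1) {
        perror("munmap");
        rc = -1;
    }
cleanup:
    close(fd);
    unlink(path);  /* remove the scratch file in every case */
    return rc;
}
```

For example, a small main() calling check_mmap_support("/opt/lixa/var") on the node that currently owns the shared disk would tell you whether the state directory of a default installation sits on a filesystem offering the required calls.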

The easiest high-availability configuration uses:

- a shared/mirrored disk containing the LIXA file tree (/opt/lixa if you are using a default installation)
- a dedicated service IP address that must be activated on the host currently providing the LIXA service

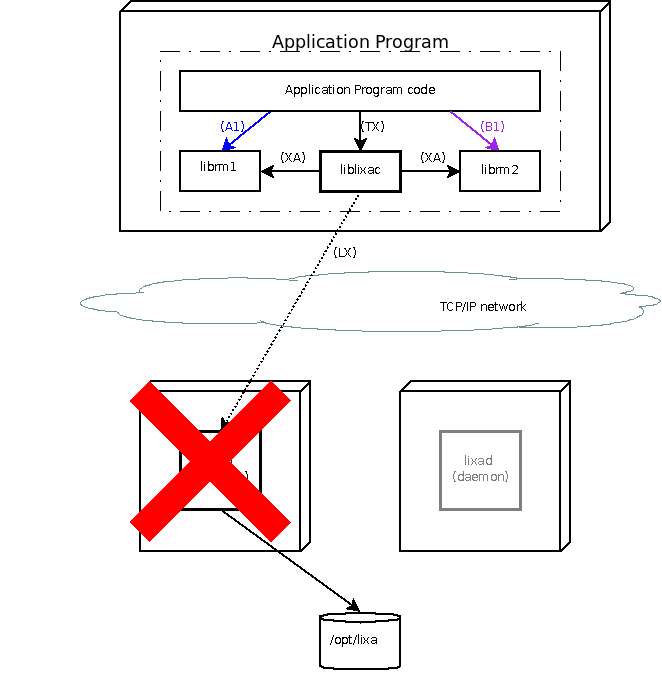

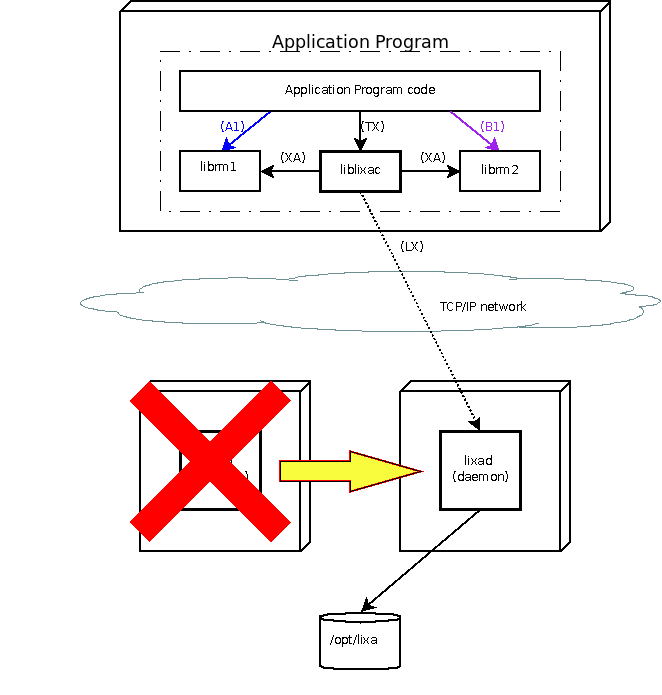

The following pictures show an high availability configuration in action:

Note

If you put all the LIXA installation files (/opt/lixa) on the shared disk, you will not be able to run the LIXA utility programs from the node that does not own the shared disk: this should not be an issue if your active/passive cluster hosts only lixad.

If you are using a more complex configuration, it might be preferable to put only /opt/lixa/var and /opt/lixa/etc on the shared disk. You could implement such a configuration using symbolic links or by customizing the configure procedure with the --sysconfdir=DIR and --localstatedir=DIR options. Use ./configure --help for more details.